In our recent data workshop series, business and IT leaders raised practical questions about modern data architecture and AI, covering concerns around governance, speed, workflows, and accuracy:

Will Salesforce Data Cloud duplicate what we’ve already built in Snowflake, Databricks, or BigQuery? Can the architecture scale across the enterprise? How do ERP and other legacy systems fit? Is transactional data too messy to be useful? Which domains should we tackle first? And once the foundation is clear, how do we align departments and build urgency to act?

Here’s how those questions play out—and the answers teams need before they can move forward.

1. We’ve invested heavily in Snowflake, Databricks, or BigQuery. How does Salesforce Data Cloud fit?

Data teams often ask if Salesforce Data Cloud duplicates their lakehouse investment. It doesn’t. They serve different purposes in a modern data architecture.

The lakehouse (Snowflake, Databricks, BigQuery) is the governed system of reference under IT’s control. It’s designed to:

- Store raw and processed data at scale

- Run transformations, aggregations, and advanced calculations (e.g., SQL pipelines, ML feature engineering)

- Apply governance and security policies

- House the semantic layer definitions (metrics, business logic)

Salesforce Data Cloud is the activation layer that connects governed data to Salesforce applications. It’s designed to:

- Resolve identities by stitching customer records across sources

- Harmonize data into unified customer profiles

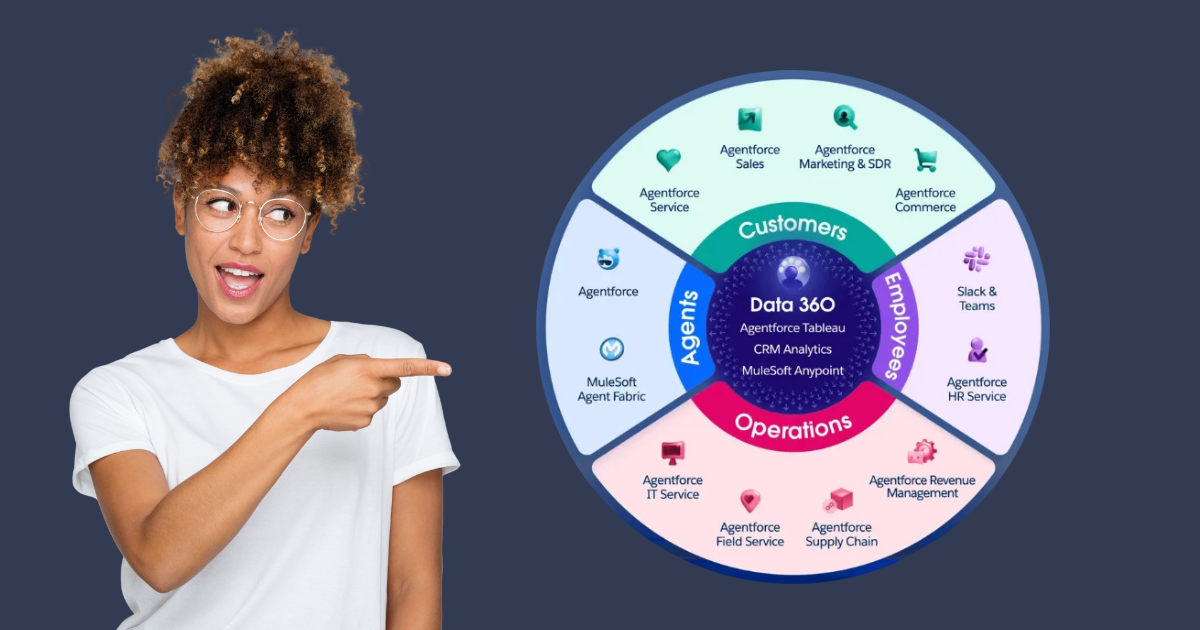

- Activate governed data in Salesforce apps (Sales, Service, Marketing, Commerce)

- Power personalization, segmentation, triggers, and AI inside Salesforce
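To make "stitching customer records across sources" concrete, here is a minimal sketch in Python. It assumes exact matching on a normalized email address, which is a deliberate simplification and not Data Cloud's actual matching logic. The function names, field names, and sample records are all hypothetical.

```python
from collections import defaultdict


def normalize_email(email):
    """Lowercase and trim an email so trivial variants compare equal."""
    return email.strip().lower()


def stitch_profiles(records):
    """Group records from different sources that share a normalized email."""
    profiles = defaultdict(lambda: {"sources": [], "names": set()})
    for rec in records:
        key = normalize_email(rec["email"])
        profiles[key]["sources"].append(rec["source"])
        profiles[key]["names"].add(rec["name"])
    return dict(profiles)


# Hypothetical records from three source systems.
records = [
    {"source": "crm", "email": "Ana.Diaz@example.com ", "name": "Ana Diaz"},
    {"source": "erp", "email": "ana.diaz@example.com", "name": "A. Diaz"},
    {"source": "support", "email": "li.wei@example.com", "name": "Li Wei"},
]

unified = stitch_profiles(records)
for email, profile in unified.items():
    print(email, profile["sources"])
```

The CRM and ERP records collapse into one profile because their emails differ only in case and whitespace. Real identity resolution usually combines several match keys and fuzzy rules, which this sketch leaves out.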

The lakehouse governs and computes; Data Cloud makes that intelligence usable where business teams work.

2. How do we design a data architecture that scales with the enterprise?

Most enterprises already move data between systems, but often through siloed pipelines that don’t hold up as the business grows: more sources are added, more domains are brought in, and more teams want access. Without the right foundation, that growth leads to duplicate pipelines, conflicting definitions, and teams building their own workarounds.

A modern data architecture avoids that by following a deliberate sequence:

- Store: The lakehouse (Snowflake, Databricks, BigQuery) is the governed system of reference, built to handle raw and processed data at enterprise scale.

- Transform: In the lakehouse, data is standardized and definitions are applied in a semantic layer so they remain consistent across domains and systems.

- Activate: Salesforce Data Cloud makes that governed, standardized data available in Salesforce, so it’s usable in Sales, Marketing, Service, and beyond.

Because the foundation is designed for expansion, adding a new data domain or use case doesn’t mean re-engineering pipelines. It means extending what’s already governed, consistent, and ready for activation.
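The store, transform, activate sequence can be sketched as three small Python functions. This is an illustration of the ordering only, not a representation of any vendor's API. The `CustomerRow` type, the `ACTIVE_CUSTOMER_MIN_ORDERS` definition, and the sample rows are assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical shared definition, standing in for a semantic-layer entry.
ACTIVE_CUSTOMER_MIN_ORDERS = 1


@dataclass
class CustomerRow:
    customer_id: str
    country: str
    orders_last_90d: int


def store(raw_rows):
    """Store: keep raw rows unchanged as the system of reference."""
    return list(raw_rows)


def transform(stored_rows):
    """Transform: standardize formats and apply the shared definition."""
    return [
        {
            "customer_id": row.customer_id,
            "country": row.country.strip().upper(),
            "is_active": row.orders_last_90d >= ACTIVE_CUSTOMER_MIN_ORDERS,
        }
        for row in stored_rows
    ]


def activate(rows):
    """Activate: expose the standardized rows keyed for downstream apps."""
    return {row["customer_id"]: row for row in rows}


raw = [CustomerRow("C1", " us", 3), CustomerRow("C2", "de ", 0)]
activated = activate(transform(store(raw)))
print(activated["C1"])
```

Adding a new domain in this shape means adding rows and definitions to the existing steps rather than writing a separate pipeline, which is the point the section makes about extension.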

3. How do we make ERP and legacy data useful in a modern architecture?

ERP and other core systems aren’t going anywhere—they run critical transactions. But their data isn’t structured to fuel AI, personalization, or predictive workflows in Salesforce.

In modern data architecture, ERP becomes a first-class source. Its data flows into the lakehouse, where it’s standardized and combined with customer, revenue, and product domains. From there, Salesforce Data Cloud activates the governed data inside Salesforce applications.

The result: ERP no longer sits on an island. Finance sees reconciled numbers tied to the same customer records Sales and Marketing are using. Service teams gain operational context without toggling across multiple systems. The ERP continues running operations, while its data strengthens a governed, AI-ready foundation.

4. Is transactional data too messy to be useful?

At first glance, transactional data from ERP or other legacy systems looks unusable: inconsistent formats, null fields, fragmented customer records. Many organizations dismiss it as too messy and miss the value signals hiding inside.

When processed through a modern data architecture, this data becomes indispensable. In the lakehouse, transactional feeds are standardized, enriched, and combined with customer domains. Activated in Salesforce Data Cloud, they support everything from accurate reporting and behavioral segmentation to AI models that detect patterns never revealed by surveys or static attributes.

Support ticket spikes can surface weeks before churn. Usage data in billing systems can flag expansion opportunities early. The data may look messy initially, but with the right architecture, it becomes one of the clearest windows into customer behavior.
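As a rough illustration of what "standardized" can mean for a messy transactional feed, the Python sketch below normalizes customer IDs, parses dates that arrive in several formats, and keeps missing amounts as explicit nulls. The list of date formats, the field names, and the sample rows are assumptions for the example, not a description of any particular ERP export.

```python
from datetime import datetime

# Assumed set of date formats seen in the feed; extend as needed.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def parse_date(value):
    """Try each known format; return None when the value is missing or unparseable."""
    if not value:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date()
        except ValueError:
            continue
    return None


def standardize(txn):
    """Return one transaction with a consistent shape and explicit nulls."""
    amount = txn.get("amount")
    customer_id = (txn.get("customer_id") or "").strip().upper()
    return {
        "customer_id": customer_id or None,
        "order_date": parse_date(txn.get("order_date")),
        "amount": float(amount) if amount not in (None, "") else None,
    }


# Hypothetical raw rows with inconsistent formats and null fields.
raw = [
    {"customer_id": " c-001", "order_date": "2024-03-05", "amount": "120.50"},
    {"customer_id": "C-001", "order_date": "03/18/2024", "amount": None},
    {"customer_id": None, "order_date": "18.03.2024", "amount": "75"},
]

clean = [standardize(t) for t in raw]
for row in clean:
    print(row)
```

After this step the first two rows share the same customer ID and can be joined to a customer domain, while the third row is kept with an explicit null instead of being silently dropped.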

5. Which data should we modernize first?

You can’t modernize everything at once; the effort spreads too thin and results stall. The fastest way to build momentum is to start with the datasets that matter to the most teams.

For most enterprises, that means customer, revenue, or product data.

- Customer data creates a single profile used by Sales, Marketing, Service, and Finance.

- Revenue data aligns forecasts, pipeline, and reporting.

- Product data supports personalization, expansion, and cost accuracy.

The right entry point varies by industry. In manufacturing, service or supply data may come first. In the public sector, constituent data often leads.

Modernization happens as datasets move through the architecture. They’re stored in the lakehouse as the governed system of reference. They’re transformed by standardizing formats, cleaning inputs, enriching with other sources, and applying shared definitions. Then they’re activated in Salesforce Data Cloud so teams can use the same trusted data in their daily work.

Each dataset modernized this way delivers measurable business outcomes and builds the confidence to tackle the next one.

6. How do we deliver governed data at the speed business teams need?

Data projects often stall because IT and business approach the problem from opposite ends. Business teams push for speed—they need usable data now to launch campaigns, target accounts, or build models. To move quickly, they often create extracts or side systems that get the job done but lead to inconsistencies later. IT, on the other hand, slows things down to enforce governance—adding controls, reviews, and processes that protect the data but delay delivery.

A modern data architecture changes that dynamic. Governance is applied upstream in the lakehouse, where data is tagged, standardized, and defined in the semantic layer. When Salesforce Data Cloud activates that data, those rules carry through into the systems where people work. Salesforce adds contextual controls like role-based access, but the foundation remains consistent.

The result: business teams get governed data fast enough to keep pace with their work, and IT defines rules once instead of reviewing every request downstream.
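One way to picture "define rules once" is a field-level tag assigned upstream plus a role policy applied wherever the data is read. The Python sketch below assumes a simple tag-per-field model. The tag names, roles, and record are hypothetical and do not reflect how the lakehouse or Salesforce actually stores policies.

```python
# Hypothetical field tags, assigned once upstream.
FIELD_TAGS = {
    "customer_id": "internal",
    "email": "pii",
    "lifetime_value": "internal",
    "credit_limit": "financial",
}

# Hypothetical role policy, evaluated when a record is read.
ROLE_ALLOWED_TAGS = {
    "marketing": {"internal", "pii"},
    "service": {"internal"},
    "finance": {"internal", "financial"},
}


def view_for_role(record, role):
    """Return only the fields whose tag the role may see; untagged fields are hidden."""
    allowed = ROLE_ALLOWED_TAGS[role]
    return {k: v for k, v in record.items() if FIELD_TAGS.get(k) in allowed}


record = {
    "customer_id": "C1",
    "email": "ana.diaz@example.com",
    "lifetime_value": 1200,
    "credit_limit": 5000,
    "notes": "untagged free text",
}

for role in ROLE_ALLOWED_TAGS:
    print(role, view_for_role(record, role))
```

Because untagged fields are hidden by default, a new column does not reach any team until someone classifies it, which keeps review effort at the definition step instead of at every request.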

7. How do we align departments around Salesforce Data Cloud?

Business teams usually see the appeal quickly. Marketing wants richer segmentation, Sales wants better account prioritization, and Service wants clearer retention signals. The hesitation often comes from IT, which sees Data Cloud as a layer on top of the lakehouse they already manage. From their perspective, it can look redundant.

The shift occurs when IT and the business both see that Data Cloud isn’t another silo—it’s how the same governed data is activated across functions. A customer value score defined once in the semantic layer and calculated in the lakehouse shows up in Salesforce as a targeting variable for Marketing, a prioritization score for Sales, a renewal trigger for Service, and a reconciled metric for Finance.
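The idea of a score that is defined once and consumed by several teams can be sketched in Python as follows. The weights, the 0.7 threshold, the feature names, and the sample accounts are all assumptions made for illustration; a real definition would live in the semantic layer and be computed in the lakehouse.

```python
# Hypothetical weights; inputs are assumed to be pre-scaled to the range 0..1.
VALUE_SCORE_WEIGHTS = {
    "annual_revenue": 0.6,
    "product_count": 0.3,
    "tenure_years": 0.1,
}
HIGH_VALUE_THRESHOLD = 0.7  # hypothetical cutoff shared by all consumers


def customer_value_score(features):
    """Single shared definition: a weighted sum of the scaled features."""
    total = sum(w * features[name] for name, w in VALUE_SCORE_WEIGHTS.items())
    return round(total, 3)


def marketing_segment(score):
    """Marketing reads the score as a targeting variable."""
    return "high_value" if score >= HIGH_VALUE_THRESHOLD else "standard"


def sales_priority(scores):
    """Sales ranks accounts by the same score, highest first."""
    return sorted(scores, key=lambda account: scores[account], reverse=True)


def service_renewal_flag(score):
    """Service uses the same threshold as a renewal trigger."""
    return score >= HIGH_VALUE_THRESHOLD


features = {
    "acme": {"annual_revenue": 0.9, "product_count": 0.8, "tenure_years": 0.5},
    "globex": {"annual_revenue": 0.3, "product_count": 0.2, "tenure_years": 0.9},
}
scores = {name: customer_value_score(f) for name, f in features.items()}
print(scores, sales_priority(scores))
```

Each team function consumes the score without recomputing it, so a change to the weights propagates to every consumer at once.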

When every team sees their needs tied to the same governed foundation, resistance fades. The conversation shifts from “Do we need this?” to “How fast can we get it working?”

8. What happens if we wait to modernize our data architecture?

It’s tempting to hold off on data modernization until AI use cases feel clearer. But waiting creates its own risks. Establishing semantic consistency, building zero-copy integrations, and activating priority datasets in Salesforce take time—anywhere from 6 to 12 months.

If your organization expects to see AI-driven personalization, automation, or predictive insights on the same horizon, the foundation has to be in place well before then. Modernization isn’t a switch you flip when the use case arrives—it’s the groundwork that makes those use cases possible.

Data Modernization as the Operating Model

The real takeaway from these questions is that data modernization is about creating an architecture and operating model that makes data trustworthy, shareable, and ready for activation across the enterprise.

When that model is in place, executives stop disagreeing about numbers, business teams move faster without workarounds, and IT defines governance once instead of policing every request. And because the foundation is connected and consistent, AI can be introduced with confidence instead of risk.

If you’re ready to define what this looks like for your organization, Coastal’s Data Strategy Lab helps leadership teams set priorities and plot the next steps to modernize their data and launch AI-ready systems with clarity and less risk.